If you’ve been paying attention to the rumour mill over the last month or two, you will have heard musings regarding the “next-big-thing” in computing: NVIDIA’s RTX 3000 Series Graphics Cards.

Last night (September 1st 2020, 5pm BST), NVIDIA finally broke their silence with a full rundown of their newest line of graphics cards (or at least the most powerful trio), which is due to launch very soon.

What was announced?

Here’s a really quick run-through of everything NVIDIA announced:


- NVIDIA Reflex

- GPU Tuning in GeForce Experience

- GeForce 360Hz G-SYNC Displays

- NVIDIA Broadcast

- NVIDIA DLSS 2.0

- RTX IO

- RTX 3080 GPU

- RTX 3070 GPU

- RTX 3090 GPU

We’ll take you through all of the important bits and get you in the know!

NVIDIA Reflex

In esports and high-speed gaming, speed and reaction are king. We typically hear a lot about wanting PCs that achieve the highest frame rates in specific games. What we don’t hear much about is people wanting the lowest possible “latency”. To clarify, that is the time between the PC receiving your input and the result appearing on-screen.

In summary, if your latency is too high, you can have the fastest reaction time in the world but you’re still not going to win, because you’re responding to information that is already out of date.

NVIDIA can’t do much about an individual gamer’s reaction time, but it can help to reduce system latency.

NVIDIA Reflex is NVIDIA’s “groundbreaking” technology for reducing system latency in high-speed games. The improvements arrive in September as a Game Ready driver, available to GPUs from the 1000 Series and newer. In addition, the NVIDIA Reflex SDK allows developers to build latency-reducing features directly into their games.
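One simple way to picture system latency is as the sum of every stage your input passes through before it reaches the screen. The sketch below assumes a simplified five-stage pipeline; the stage names are our own labels and the millisecond values are hypothetical, not measurements or NVIDIA figures.

```python
# Hypothetical stage timings in milliseconds, for illustration only;
# these are NOT measured or NVIDIA-published figures.
PIPELINE_MS = {
    "peripheral": 1.0,    # mouse/keyboard reports the input
    "cpu": 6.0,           # game simulation and draw-call submission
    "render_queue": 8.0,  # frames waiting their turn on the GPU
    "gpu": 7.0,           # GPU renders the frame
    "display": 5.0,       # scan-out and pixel response
}


def system_latency_ms(stages: dict[str, float]) -> float:
    """End-to-end latency is the sum of every stage the input passes through."""
    return sum(stages.values())


total = system_latency_ms(PIPELINE_MS)

# Shrinking a single stage (here, the hypothetical render queue) lowers the
# total without making any other stage faster.
reduced = dict(PIPELINE_MS, render_queue=0.0)

print(total, system_latency_ms(reduced))  # 27.0 19.0
```

The takeaway is that a faster GPU alone only shrinks one term; cutting time spent waiting between stages reduces latency too.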

“NVIDIA Reflex optimises the rendering pipeline across CPU and GPU to reduce latency by up to 50%”

Jensen Huang, CEO NVIDIA

Combined with NVIDIA’s new G-SYNC esports displays (more on these later), the NVIDIA Reflex Latency Analyser allows gamers to measure their full system latency and work out the best strategy for reducing input lag, system latency and display latency, resulting in better game performance.

GPU Tuning in GeForce Experience

As part of the same initiative to improve system performance and reduce latency, NVIDIA is introducing GPU overclocking from within GeForce Experience. Whether you’re a fan of GeForce Experience or not, having a simple, easy-to-use interface for overclocking is a nice touch.

Simply tell GeForce Experience to automatically overclock your GPU for the best performance, or give it a more specific target voltage, clock speed (MHz) or temperature, and GeForce Experience will do the rest.

If you’re already overclocking your CPUs and GPUs, this won’t be a big deal for you. However, for those who don’t want to get into the nitty-gritty of overclocking, a simple interface with visible results is welcome.

NVIDIA GeForce 360Hz G-SYNC Displays

You read that right: 360Hz G-SYNC displays. I think it speaks volumes about how optimistic NVIDIA is about its new line of GPUs that it’s willing to put 360Hz G-SYNC monitors on the market.

There’s debate over whether you can actually perceive the difference between 360Hz and 240Hz. Without getting into that, NVIDIA is putting its money where its mouth is by partnering with Acer, Alienware, ASUS and MSI to produce 360Hz IPS esports monitors.

On top of a simply ludicrous refresh rate, these monitors feature a USB pass-through port to enable the latency analysis outlined above.
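To put those refresh rates in perspective, a quick bit of arithmetic shows how long each frame stays on screen. This is a minimal sketch of the standard conversion (1000 ms divided by the refresh rate); the list of refresh rates is just our choice of common examples.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """How long each frame stays on screen at a given refresh rate, in ms."""
    return 1000.0 / refresh_hz


for hz in (60, 144, 240, 360):
    print(f"{hz:>3}Hz -> {frame_time_ms(hz):.2f} ms per frame")
# 60Hz -> 16.67, 144Hz -> 6.94, 240Hz -> 4.17, 360Hz -> 2.78
```

Going from 240Hz to 360Hz shortens each frame by roughly 1.39 ms, which is why the jump matters most to players chasing every last millisecond.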

NVIDIA Broadcast

With more and more gamers turning their passion into a career through streaming and content creation, NVIDIA is doing its part to make that just a little bit easier. NVIDIA Broadcast builds on the AI capabilities of RTX graphics cards to produce some genuinely useful streamer tools:

Noise Removal

This isn’t strictly new, nor was it announced in yesterday’s conference, but it’s now officially part of the wider package. In case you missed it, here’s what NVIDIA’s Noise Removal is all about:

Using the AI capabilities built into RTX graphics cards, you can now remove background noise from your microphone’s input. Have a noisy keyboard? Kids playing in the background? A dog that won’t stop barking? NVIDIA’s Noise Removal does a startlingly good job of filtering it out.

Virtual Background

Just like removing background noise from your microphone, you can now use artificial intelligence to remove (and alter) the background in your camera feed. It essentially gives you all the features of a green screen, without the need for a massive green screen.

You can remove your background and replace it with a different location, give yourself a fancy “Shallow Focus” blurred background, or even overlay your webcam on your gameplay, direct from NVIDIA Broadcast.

Auto Frame

If you like a little variation in your stream’s framing, you can simply turn on “Auto Frame”. Again using the AI in your RTX graphics card, the webcam feed is cropped to keep you in shot: if you move around the frame, the cropped area simply follows you.

All very nice features.

NVIDIA DLSS 2.0

NVIDIA introduced Deep Learning Super Sampling (DLSS) with its first generation RTX graphics cards.

Deep learning super sampling uses artificial intelligence and machine learning to produce an image that looks like a higher-resolution image, without the rendering overhead.

NVIDIA RTX DLSS: Everything you need to know – Digital Trends

Alongside the new RTX 3000 GPUs, NVIDIA highlighted DLSS 2.0, the above concept on steroids. DLSS lets your GPU render gameplay at a lower resolution than the one displayed, then use its Tensor Cores to upscale the image to your target resolution, resulting in a much higher frame rate.
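The reason rendering at a lower resolution helps so much is simple pixel arithmetic. The sketch below compares how many pixels the GPU has to shade at a lower internal resolution versus native 4K; the chosen resolution pairs are our own examples, and real frame-rate gains will be smaller than these ratios because upscaling has its own cost and not all rendering work scales with pixel count.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    width, height = RESOLUTIONS[name]
    return width * height


def shading_saving(render: str, output: str) -> float:
    """How many times fewer pixels are shaded when rendering at `render`
    and upscaling to `output`, instead of rendering `output` natively."""
    return pixel_count(output) / pixel_count(render)


print(shading_saving("1440p", "4K"))  # 2.25
print(shading_saving("1080p", "4K"))  # 4.0
```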

With DLSS 2.0 enabled, NVIDIA is claiming a higher quality image than simply rendering that image at its native resolution. Crazy stuff.

Previously, the DLSS neural network had to be trained on each specific game before it would work with it. NVIDIA has since overhauled the process with a generalised network that no longer needs per-game training, supposedly allowing it to work across games and on all RTX GPUs.

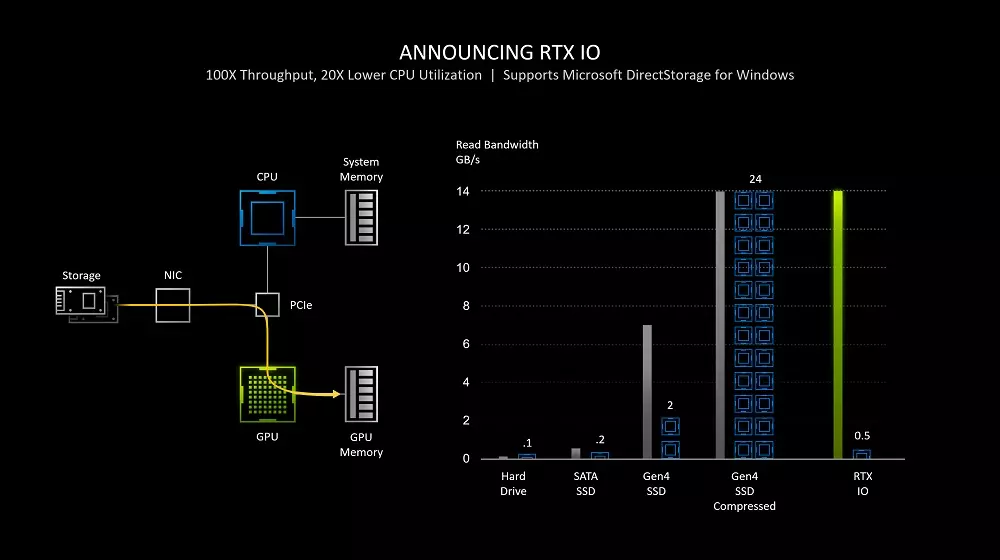

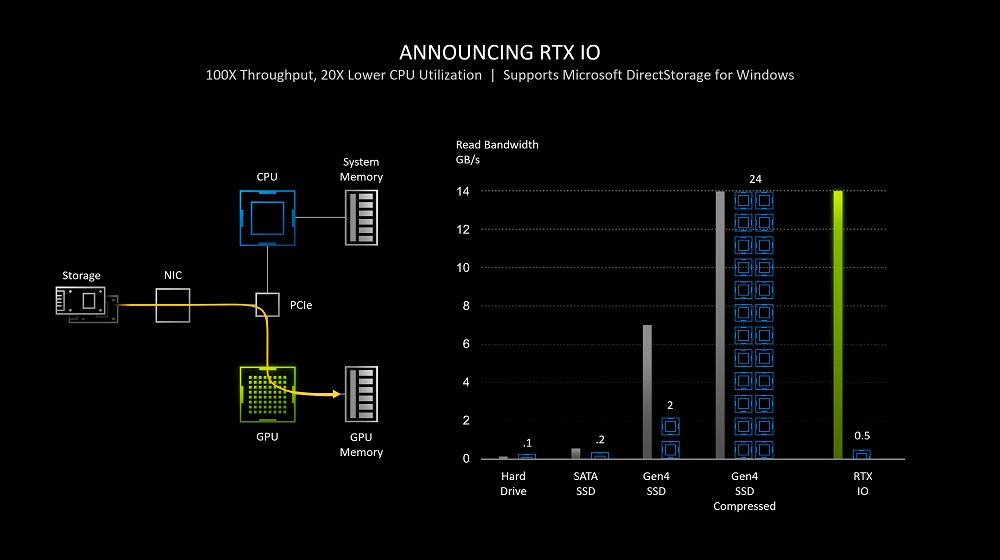

RTX IO

Games are massive nowadays. Single titles can exceed 200GB, and with games continuing to grow after launch through DLC and updates, this trend looks set to continue.

RTX IO is a suite of technologies from NVIDIA that leverages hardware in the latest 3000 Series GPUs. RTX IO uses Microsoft’s DirectStorage API to drastically reduce the CPU load of decompressing game assets. Instead of the CPU doing that work, compressed assets are transferred from NVMe storage to the GPU, which performs the lossless decompression itself.
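“Lossless” decompression means the data that comes out is byte-for-byte identical to what went in. The sketch below demonstrates that property using Python’s built-in zlib module on the CPU. zlib is purely a stand-in here: it is not necessarily the compression format RTX IO uses, and the whole point of RTX IO is that this work moves off the CPU and onto the GPU. The “asset” is a made-up repetitive byte string.

```python
import zlib

# Made-up stand-in for a game asset; repetitive data compresses well.
asset = b"grass_tile_" * 10_000

# Compress once (as a game would ship it on disk) ...
compressed = zlib.compress(asset, level=9)

# ... then decompress at load time. On a traditional PC this step is CPU work.
restored = zlib.decompress(compressed)

# Lossless: the restored bytes are identical to the original.
assert restored == asset
print(f"{len(asset)} bytes of asset data compress to {len(compressed)} bytes")
```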

Introducing: The NVIDIA RTX 3080

What you’ve all been waiting for. After walking us through the updates and innovations in the Ampere architecture, NVIDIA announced the first of its latest line of graphics cards: the RTX 3080.

The RTX 3080, as the name suggests, is the successor to the RTX 2080 and NVIDIA’s new flagship graphics card. The 3080 is packed with new technology that takes it leaps and bounds beyond the previous generation. Here’s everything we know so far:

How much does it cost?

£649, $699. A modest and respectable price, we feel, for a card this powerful (more on this later). That’s around the same RRP as the 2080 Super at launch, but you’re getting a lot more for your money here.

PAM4 Memory

The GDDR6X (G6X) memory on the brand new RTX 3080 uses something called “PAM4” signalling. Where a typical signal sends one bit at a time (either a 1 or a 0) using two voltage levels, a PAM4 signal uses four voltage levels, so each signal carries two bits (00, 01, 11 or 10). This allows twice as much data to be sent in the same time.

If that’s a little too complex, the bottom line is this: thanks to some fancy wizardry, the RTX 3080’s onboard memory can move twice as much data per signal as previous generations.
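Here is a small sketch of the difference between ordinary two-level (NRZ) signalling and PAM4. It assumes the Gray-coded level ordering 00, 01, 11, 10 listed above; the exact mapping used inside GDDR6X is an assumption on our part. The key point is that the same ten bits need ten NRZ symbols but only five PAM4 symbols.

```python
# Assumed Gray-coded mapping of bit pairs to the four PAM4 voltage levels
# (level 0 = lowest voltage, level 3 = highest).
PAM4_LEVELS = {"00": 0, "01": 1, "11": 2, "10": 3}


def nrz_symbols(bits: str) -> list[int]:
    """Two-level (NRZ) signalling: one bit per symbol."""
    return [int(b) for b in bits]


def pam4_symbols(bits: str) -> list[int]:
    """PAM4 signalling: two bits per symbol, so half as many symbols."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[bits[i:i + 2]] for i in range(0, len(bits), 2)]


data = "1100100111"
print(nrz_symbols(data))   # [1, 1, 0, 0, 1, 0, 0, 1, 1, 1]
print(pam4_symbols(data))  # [2, 0, 3, 1, 2]
```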

Innovative Cooling

We don’t normally pay much attention to NVIDIA’s own cooler designs. We know that an aftermarket design will come along offering something that works a lot better, and is a lot quieter, for the majority of our customers.

However, NVIDIA is doing something very different this time. The first time you see a 3000 series card installed in a system, you could be forgiven for thinking it’s been installed upside down.

On top of the regular underside fan, which exhausts air out of the rear I/O bracket, the 3080 uses a smaller PCB than we’ve seen on a mainstream card in a while. This allows for a “flow-through” design that pulls air across the heatsink and into the path of the CPU cooler (to be exhausted by the case’s rear fan).

We know these GPUs draw a large amount of power and therefore output a decent amount of heat. We’ll be doing our own testing on this design to see whether exhausting directly into the CPU’s path has any adverse effects on CPU temperatures.

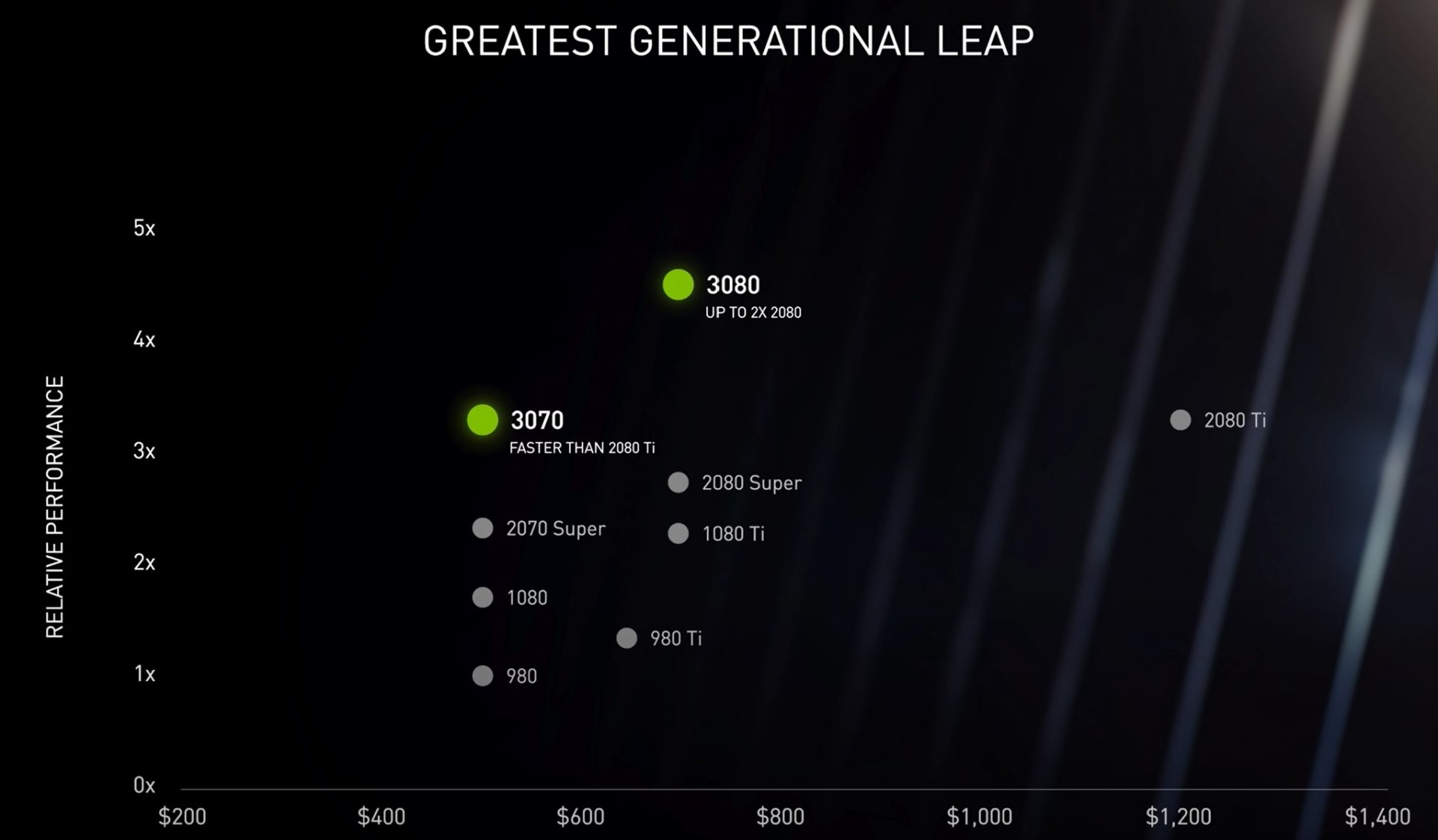

Compared to previous generations

Just take a look at NVIDIA’s comparison graphic for a view on how this GPU compares against predecessors. You have the cost of the GPU along the bottom, and relative performance up the side. The closer to the top left, the better.

The RTX 3080 supposedly delivers almost twice the performance of a 2080 for the same price, and it destroys the 2080 Ti. Naturally, we don’t have any benchmarks besides those provided by NVIDIA. Even taken with a grain of salt, though, they show a GPU that is head and shoulders above the rest.

NVIDIA’s benchmarks show this GPU outputting 80fps at 4K with RTX enabled in some games. We’re not going to rattle through all of NVIDIA’s own benchmarks here; there’s a link to the product page below for all that information.

Subscribe to our newsletter to get notified when we get hands on with our own benchmarks.

For raw specifications, you can view the NVIDIA RTX 3080 product page.

Introducing: The NVIDIA RTX 3070

If you’re looking at the RTX 3080 and thinking, “That’s great, but it’s a bit too much for my needs”, then the 3070 has you covered. The RTX 3070 supersedes NVIDIA’s RTX 2070 and offers a whole host of improvements over the previous generation.

How much does it cost?

£469, $499 RRP. For under £470, you can now buy a GPU that NVIDIA claims is as powerful as a 2080 Ti.

GDDR6 Memory

Unfortunately, the 3070 doesn’t feature the same high-speed PAM4 memory as its bigger brother. Instead, it comes with 8GB of old faithful GDDR6 memory (not actually old in the slightest).

Introducing: The NVIDIA RTX 3090

What NVIDIA has lovingly dubbed the “BFGPU”, the RTX 3090, is the “Titan RTX” of this generation. NVIDIA is doing away with the Titan name this generation and releasing it as the RTX 3090. Granted, “3090” doesn’t sound quite as great as “TITAN”, but NVIDIA has thoroughly exhausted those naming options.

What does it cost?

£1399, $1499. It’s a hefty pill to swallow for the average gamer, but the Titan range was never really intended for your “everyday” gamer. The RTX 3090 is for content creators, streamers, visual artists and the like. This card can supposedly run games at 8K with RTX at over 60fps.
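For a sense of how demanding 8K is, here is the raw pixel throughput compared with 4K at the same frame rate. This is simple arithmetic on the standard 8K (7680×4320) and 4K (3840×2160) resolutions, before any upscaling tricks are taken into account.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels the GPU must deliver each second at a given resolution and frame rate."""
    return width * height * fps


eight_k = pixels_per_second(7680, 4320, 60)
four_k = pixels_per_second(3840, 2160, 60)

print(f"{eight_k:,} vs {four_k:,} pixels per second")
# 1,990,656,000 vs 497,664,000 pixels per second
print(eight_k // four_k)  # 4
```

In other words, 8K at 60fps means delivering four times as many pixels every second as 4K at 60fps.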

Don’t know anyone with an 8K television?

If you’re in need of a serious card with serious capabilities, the RTX 3090 is a workhorse of a card. Without a doubt.

24GB of GDDR6X Memory

Remember that innovative memory we mentioned for the RTX 3080? Well, the 3090 comes with it in spades: 24GB of GDDR6X memory.

Compared to previous generations

NVIDIA hasn’t provided any benchmarks, tests or comparisons for this card. It did give four influencers the opportunity to play the latest games at 8K with RTX at 60fps, and by all accounts they were pretty impressed. However, NVIDIA’s lack of benchmarks here is a little unsettling.

Rest assured, when we have one in our hands, we’ll be doing some thorough testing.

Well, that’s the majority of what was covered in the conference. If you want to watch the entire announcement and soak up any juicy bits we missed, you can view it on NVIDIA’s YouTube channel here.

For more news and updates on the latest NVIDIA releases and more, subscribe to our newsletter.

Will you be upgrading to a 3000 series GPU? Let us know in the comments below!